I got to pull some Ethernet taps out of the closet to diagnose a problem last week, and was inspired to bang some thoughts about taps into my keyboard. So here they are: More than you probably want to know about Ethernet taps.

Ethernet taps are in-line devices used for capturing data between two systems. They're not the weapon of choice when troubleshooting application layer problems because mirror functions on switches (SPAN) and capture mechanisms on servers (tcpdump / Wireshark) are much more convenient there.

Where taps really shine is for the sorts of problems which might cause you to mistrust one or both of the systems on the ends of a link.

Say we've got a performance-related packet delivery problem. It's possible that the switch mirror function will claim that a frame was sent when in reality the frame was dropped due to a buffering problem downstream of the mirror function.

On the other hand, there are times when packet captures performed at the server claim packets went undelivered or were delivered late when in reality the packets were delivered just fine.

You also might want to save your (limited) mirror sessions for tactical work, rather than burning up this resource for a long-term traffic monitoring solution.

So, what do you do when mirror (SPAN) and capture (tcpdump) disagree about what packets were delivered, or you want to deploy a long-term strategic monitor? You put a tap in the link.
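Here's a rough sketch of how a tap capture can settle that kind of disagreement. It assumes each capture has already been reduced to a list of frame identifiers (a hash of the frame bytes, say); the capture lists and the `missing_frames` helper are hypothetical, not any tool's real output.

```python
from collections import Counter

def missing_frames(reference, other):
    """Frames seen in `reference` more times than in `other` (multiset difference)."""
    return Counter(reference) - Counter(other)

# Hypothetical frame identifiers from three vantage points on the same link.
mirror_capture = ["f1", "f2", "f3", "f4"]   # what the switch mirror claims was sent
server_capture = ["f1", "f2", "f4"]         # what tcpdump on the server saw
tap_capture = ["f1", "f2", "f3", "f4"]      # what was actually on the wire

# The mirror and the server disagree about f3.
print("mirror vs server:", dict(missing_frames(mirror_capture, server_capture)))

# The tap breaks the tie: if f3 is on the wire, the frame reached the link
# and the loss happened at (or inside) the server.
print("mirror vs tap:   ", dict(missing_frames(mirror_capture, tap_capture)))
print("tap vs server:   ", dict(missing_frames(tap_capture, server_capture)))
```

Using a `Counter` rather than a `set` keeps retransmitted or duplicated frames from hiding a genuine loss.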

Optical Taps

The simplest form of Ethernet tap is a fiber optic splitter. Light enters the splitter via the blue fiber on the left, and is split into the blue and red fibers on the right:

The following photo illustrates the function. Light from my flashlight is appearing in both the red and blue fibers.

One of those fibers will be connected to the analysis station, and the other one to the destination system (the other end of the link).

Optical taps are generally packaged in pairs: one for "Northbound" traffic and one for "Southbound" traffic on the link. Here's a typical assembled tap:

Optical taps don't require any power, and they're generally safe for long-term deployment on critical infrastructure links. They can be used for 10Mb/s, 100Mb/s, 1Gb/s or 10Gb/s links (it's just glass after all), so long as the fiber is the correct type in terms of modality and core diameter.

Taps like this generally express the amount of light delivered through the link vs. delivered to the analysis station in terms of dB loss. You'll want to consider your cable lengths and light power budget when installing one of these taps. When tapping in-room links, I've never had any problem using splitters that divide the light power evenly.
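To put rough numbers on that, the ideal loss on each leg of a splitter follows directly from the fraction of light sent that way. The sketch below is just that arithmetic; the function name and the 70/30 ratio are illustrative assumptions, and real splitters add some excess loss on top, so check the datasheet.

```python
import math

def split_loss_db(fraction):
    """Ideal loss in dB for a splitter leg that receives `fraction` of the light."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return -10 * math.log10(fraction)

# Even split: each leg loses about 3 dB.
even = split_loss_db(0.5)

# Uneven split (hypothetical 70/30): the link keeps more light than the analyzer.
link_leg = split_loss_db(0.7)
monitor_leg = split_loss_db(0.3)

print(f"50/50: {even:.2f} dB per leg")
print(f"70/30: link {link_leg:.2f} dB, monitor {monitor_leg:.2f} dB")
```

Subtract the relevant leg's loss from your transmit power, along with fiber and connector losses, and compare what's left against the receiver's sensitivity.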

Passive Copper Taps

Passive copper taps for 10Mb/s and 100Mb/s links work pretty much the same way as optical taps, but with electrons, not photons.

You can build a totally passive tap for just a few dollars' worth of parts from a hardware store. The result requires no power and looks like this:

These taps are effective, but I'd think twice about using them in a production environment. Playing games with the signal in this way can lead to unpredictable results, maybe even broken equipment.

Update 1/26/2012 - duaneo shared this picture of his passive tap panel:

duaneo's tap panel

Apparently it's been doing tap duty at a financial services provider for years without issue. He's got it fed into a Linux system "crammed full of NICs bonded together." Nifty, thanks for sharing!

Commercial passive taps generally work the same way, but instead of connecting the tap ports directly to the forked traces on the circuit board, they run the tapped traces into some high-impedance Ethernet repeater equipment. The signal is then repeated at full strength by powered transmit magnetics. This minimizes degradation of the link under test, and ensures that a wiring mistake on the tap ports can't knock out the link.

These sorts of taps only require power to produce the signal for the analyzer. Power loss at the tap will not impact data flow on the link under test. Accordingly, they're safe to use for long-term deployment on critical infrastructure links.

Gigabit Copper Taps

It is not (AFAIK) possible to passively tap gigabit copper links. The problem here is that with 1000BASE-T links, all copper pairs (there are four of them) are simultaneously transmitting and receiving. The stations on each end of the cable are able to distinguish incoming voltages from outgoing because they know what they've sent. They subtract the voltage they've put onto the wire pairs from the voltage they observe there. The result of this subtraction is the voltage contributed by the other end, which is the incoming signal.

An intermediary on the wire (the tap) cannot make that distinction, so the voltages observed by a 3rd party are just undecipherable noise.
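A toy model makes the subtraction trick concrete. This is a deliberately idealized sketch: it treats one wire pair as a simple sum of the two transmitted symbols, using the five signal levels of 1000BASE-T's PAM-5 coding, and ignores attenuation, propagation delay, echo and crosstalk that a real PHY has to deal with. All names here are hypothetical.

```python
import itertools

# The five PAM-5 signal levels, as abstract symbol values rather than volts.
LEVELS = (-2, -1, 0, 1, 2)

def wire_voltage(a_sent, b_sent):
    """Idealized signal on one pair: both ends drive it at the same time."""
    return a_sent + b_sent

def recover_incoming(observed, own_sent):
    """An end station subtracts what it sent to recover what the far end sent."""
    return observed - own_sent

def candidates_for_tap(observed):
    """Every (a, b) symbol pair that produces the sum a third party observes."""
    return [(a, b) for a, b in itertools.product(LEVELS, repeat=2)
            if a + b == observed]

a_sent, b_sent = 1, -2
observed = wire_voltage(a_sent, b_sent)

print("A recovers B's symbol:", recover_incoming(observed, a_sent))
print("B recovers A's symbol:", recover_incoming(observed, b_sent))
print("Tap sees", observed, "which could be any of", candidates_for_tap(observed))
```

Each end station gets an unambiguous answer because it holds one of the two unknowns. The observer in the middle holds neither, so several symbol pairs fit the same observation.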

Tap manufacturers don't like to admit this, but 1000BASE-T taps are never passive devices. They're more like a small switch with mirror functions enabled. The Ethernet link on both ends is terminated by the tap hardware, and frames traversing the link under test are repeated by the tap.

1000BASE-T tap manufacturers mitigate the risk of installing their wares by providing redundant power supplies, internal batteries, and banks of relays which will re-wire the internals (reconnecting the link under test) if power to the tap fails.

Manufacturers use terms like "link lock", "zero delay", "zero impact", "fail safe", "totally passive" and "permanent network link" to describe their taps, but I don't believe that it's possible to implement a truly safe 1000BASE-T tap. If I'm wrong about this, please enlighten me in the comments!

Consider some confusing language (new version of the manual here) used by the manufacturers:

The major features of the CA full-duplex Copper TAPs are:

- Passive access at 10/100 or 1000 Mbps without packet tampering or introducing a single point of failure

Then, later in the same document:

With a 10/100/1000 Mb Copper TAP, the TAP must be an active participant in the negotiated connections between the network devices attached to it. This is true if the TAP is operating at 10, 100, or 1000 Mb. Power failure to the TAP results in the following:

...

- If you are not using a redundant power supply or UPS or power to both power supplies is lost, then:

...

- The TAP continues to pass data between the network devices connected to it (firewall/router/switch to server/switch). In this sense the TAP is passive.

- The network devices connected to the TAP on the Link ports must renegotiate a connection with each other because the TAP has dropped out. This may take a few seconds.

Using the term "passive" to describe a system where the tap actively terminates the Ethernet link with both end stations, and where the frames are passed through moving parts (relays) makes my head spin.

Here's a shot of the relays in that "passive" tap:

Interestingly, this tap uses a Bel MagJack, which packages the RJ45 jack and the Ethernet magnetics into a single unit. I gotta confess that I'm a little puzzled about how the relays do their job here, because the MagJack doesn't provide direct access to the Ethernet leads, only to the magnetics. I guess running the integrated magnetics back-to-back (through the relays), without a contiguous Cat5 cable, is acceptable?

If anybody from Network Instruments (Hi Pete!) wants to chime in, I'd love to hear how/why this works. Sorry about bashing your marketing there, but the other guys are about to get their turn...

Update (1/25/2012): I pinged Rich Seifert with the question about back-to-back MagJacks. Rich said:

Yes, it is possible to "pass through" an Ethernet connection (10, 100, or 1000BASE-T) using a pair of back-to-back transformers. There will be some loss, so it will reduce the maximum allowable link length from the 100m specification, but in most environments this is not an issue.

Another interesting thing about this tap is how the board is set up to build many different products. On the left are a pair of SFP connectors (you can see one of them in the image) that are unused on my model. Around back are solder pads for a SO-DIMM memory holder; presumably this is the packet buffer for the aggregation tap models. Nifty.

Here's another example of misleading marketing:

With the Gig Zero Delay Tap, power glitches and failures no longer mean dropped packets and lengthy renegotiation sequences. Your network operates more smoothly and your critical business applications remain responsive with the Gig Zero Delay Tap in your monitoring infrastructure.

Sounds great, right? They fail to mention how that really works:

Warning! ... This product contains a NiMH battery. Consult your shipping carrier regarding regulations on safe shipping of NiMH batteries.

So, no dropped packets on power failure (until the batteries run out). I don't know why these sorts of claims tend to be so over-inflated, but the problem is nearly universal:

Operates transparently.

...

Can be left in place permanently.

...

Provides continuous network data flow if the power fails.

Just like the first one, this tap will go "click!" as the relays re-route traffic during a power failure. Sure, it's milliseconds-fast, but does that matter? An MS Windows based server will destroy all of its TCP sessions in the face of momentary link loss. Spanning tree might have to reconverge. Routing information learned over the link will be lost. That "click!" can be devastating.

I believe that this tap is actually exactly the same thing as the first tap I mentioned, but with different paint and stickers. I prefer to buy my gear from the real manufacturers.

I want to take this opportunity to give a shout-out to the folks at Network Critical. I just perused some of their product materials and I didn't find anything misleading in this regard. Way to go, guys!

I've used taps from all of the companies I linked above. They were all good products. I just wish the marketing departments were more forthcoming about what's really going on inside the boxes. Having to figure out that "zero delay" means "onboard battery with limited lifetime" is ridiculous.

Active taps scare me. They're a point of failure that doesn't have to be there.

Aggregator Taps

All of the taps I've described so far are "full duplex" taps. This means that you need two sniffer/analyzer ports in order to see bidirectional data. One tap port will deliver "Northbound" frames to the sniffer, and the other will deliver "Southbound" frames.
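With two capture ports, reading a conversation in order means interleaving the two per-direction streams by timestamp. Here's a minimal sketch of that merge. It assumes both analyzer ports stamp frames against the same clock and that each capture is already sorted by time; the record layout and sample frames are made up for illustration.

```python
import heapq

# Hypothetical records: (timestamp_seconds, direction, summary).
northbound = [(0.001, "N", "SYN"), (0.005, "N", "ACK"), (0.006, "N", "GET /")]
southbound = [(0.003, "S", "SYN-ACK"), (0.009, "S", "200 OK")]

def merge_directions(north, south):
    """Interleave two timestamp-sorted captures into one ordered stream."""
    return list(heapq.merge(north, south, key=lambda rec: rec[0]))

for ts, direction, summary in merge_directions(northbound, southbound):
    print(f"{ts:.3f} {direction} {summary}")
```

If the two ports are timestamped by different clocks, the merged order is only as trustworthy as the clock synchronization between them.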

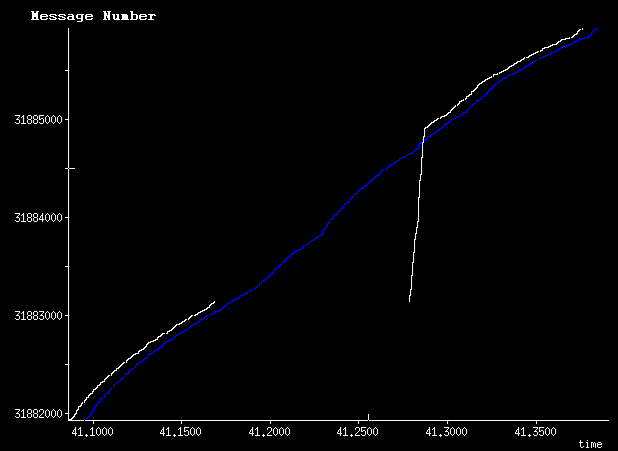

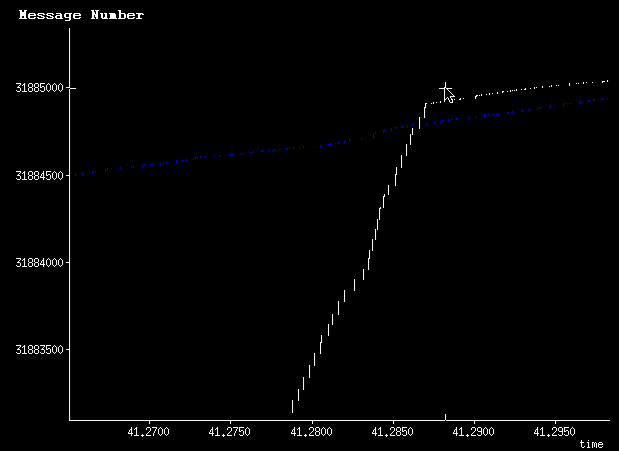

Aggregator taps on the other hand, combine northbound and southbound data into a single analyzer interface. This is convenient, because aggregating the data can be a hassle, but aggregation inside the tap introduces some downsides:

- Aggregator taps are more expensive because they include processor, buffering and arbitration logic not required by full duplex taps.

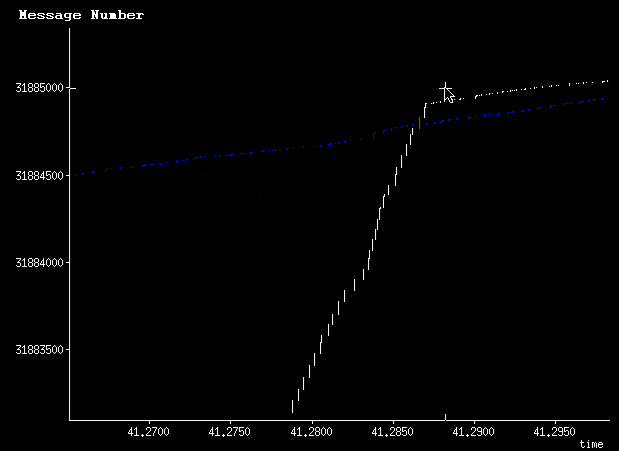

- Aggregator taps will delay and possibly drop frames because the analyzer port is oversubscribed 2:1.

It's interesting to note that

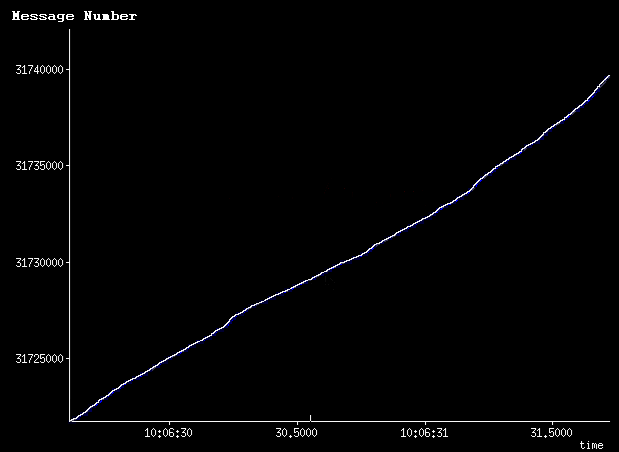

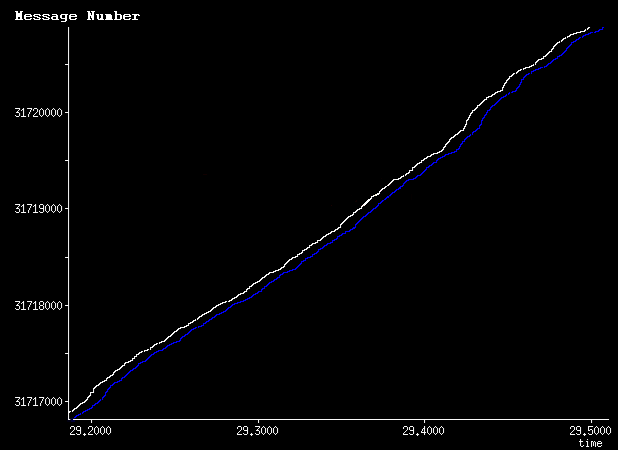

some of the "zero delay" taps tout their large packet buffers on the analyzer ports. If data isn't being delayed, what's this huge packet buffer for? In this case, it's "zero delay" refers only to the link under test, and it doesn't really mean anything about delay... It's the marketing term for relays and batteries. The fact is, tap output data may be delayed up to 160ms (2MB buffer draining at 100Mb/s) with this device. Does it matter to you? Maybe. Does it sound

anything like zero delay? I'd wager not.
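The 160ms figure is just the time a full buffer takes to drain at the analyzer port's speed. A quick sketch of the arithmetic, taking 2MB as 2,000,000 bytes (the function name is hypothetical):

```python
def max_buffer_delay_s(buffer_bytes, drain_bps):
    """Worst-case queuing delay: time to drain a completely full buffer."""
    return buffer_bytes * 8 / drain_bps

# 2MB buffer draining through a 100Mb/s analyzer port.
delay = max_buffer_delay_s(2_000_000, 100_000_000)
print(f"{delay * 1000:.0f} ms")
```

The same buffer behind a gigabit analyzer port would drain ten times faster, so the worst case depends as much on the output speed as on the buffer size.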

Aggregator taps make good sense when dealing with sub-rate services (100Mb/s service on a gigabit link), or when monitoring heavily uni-directional applications like financial pricing feeds or IPTV services. If it's likely that the combined bidirectional traffic will exceed the speed of the analyzer port, then I prefer to use full-duplex taps with a dedicated tap aggregation appliance.
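A back-of-the-envelope check can help with that decision. The sketch below compares the combined traffic in both directions against the analyzer port speed and, when the port is oversubscribed, estimates how long a buffer can absorb the excess before frames drop. The traffic rates and the 2MB buffer are made-up inputs, and sustained constant rates are a simplifying assumption; real traffic is bursty.

```python
def analyzer_headroom_bps(north_bps, south_bps, analyzer_bps):
    """Positive: the aggregated stream fits. Negative: the port is oversubscribed."""
    return analyzer_bps - (north_bps + south_bps)

def seconds_until_drops(buffer_bytes, north_bps, south_bps, analyzer_bps):
    """How long a buffer absorbs a sustained overload; None if it never fills."""
    excess = (north_bps + south_bps) - analyzer_bps
    if excess <= 0:
        return None
    return buffer_bytes * 8 / excess

GIG = 1_000_000_000

# Sub-rate service: 100Mb/s each way on a gigabit link fits easily.
print(analyzer_headroom_bps(100_000_000, 100_000_000, GIG))

# Busy link: 700Mb/s each way overruns a gigabit analyzer port.
print(seconds_until_drops(2_000_000, 700_000_000, 700_000_000, GIG))
```

A buffer only buys time during bursts. If the average combined rate exceeds the analyzer port, no buffer size prevents drops.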

Unlike full duplex taps, an aggregator tap is always a powered device that generates a fresh (repeated) copy of each frame for the analyzer. This does not mean that an aggregator tap can't be passive. It can be, just like the 10/100 copper tap I diagrammed above. So long as the path of the link under test only crosses passive components, the tap is passive, regardless of whether the copied frames are fed into an aggregation engine.

Some aggregator taps are configurable: they can operate in either full-duplex mode or they can provide aggregated traffic to two different analyzers.

Tap Chassis

Lots of manufacturers are offering chassis of various sizes that can do tapping, aggregation and (sometimes) analysis all in one box. While each of these functions is useful, I shy away from these appliances.

Critical network links don't tend to be all in one spot. Deploying one of these appliances requires that each interesting / critical network link be patched back to the same point in the data center. A mechanical problem (fire, rack falling through the floor, etc...) could hurt every one of these critical links if you run them through the same physical spot in your facility.

Rather than bringing each link over to the One True Chassis, I prefer to bring small inexpensive taps to each link. Tap the links in situ, and send your copy of the data back to the analysis gear, instead of the other way around.

Build Fiber Gig Links

Because you can't passively tap a gigabit copper link, I think it's irresponsible to build 1000BASE-T links in critical parts of the infrastructure where you're likely going to want to do analysis. This means you should always specify optical modules for security edge and distribution tier devices. Sure, the "firewall sandwich" at the internet edge all lives in a couple of racks, and copper links would work fine. Doesn't matter. The SecOps guys will show up with some nonsense security appliance/sniffer/DLP/IDS/EUEM device that they want to put inline. If your links are copper, tapping them will add points of failure. There's no reason to take this risk.

Layer 1 Switches

These are neat products that basically boil down to electronic patch panels. They're great for tactical work.

Imagine a remote office building with 50 switches in various wiring closets that all patch back to a central distribution tier. You can enable a mirror function on all 50 switches, and patch each mirror port back to the L1 switch.

Also plugged into the L1 switch, you'll have an analyzer port.

When there's a network problem in closet 23, you log into the L1 switch and configure it to patch port 23 to the analyzer station. The switch makes some clicking noises, and now your analyzer is plugged into closet 23's mirror port, without you having to visit the site and build that patch. Pretty nifty.
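Conceptually, the L1 switch is nothing more than a table of which port is cross-connected to which. Here's a toy model of that idea. The class and port names are hypothetical and don't correspond to any vendor's management interface.

```python
class L1Switch:
    """Toy model of an electronic patch panel: ports are cross-connected in pairs."""

    def __init__(self):
        self.patches = {}  # port -> port it is currently patched to

    def unpatch(self, port):
        """Remove the patch on `port`, releasing the port at the other end too."""
        peer = self.patches.pop(port, None)
        if peer is not None:
            self.patches.pop(peer, None)

    def patch(self, a, b):
        """Connect two ports, clearing any patch either one already had."""
        self.unpatch(a)
        self.unpatch(b)
        self.patches[a] = b
        self.patches[b] = a

switch = L1Switch()
switch.patch("closet-23", "analyzer")
switch.patch("closet-7", "analyzer")   # moves the analyzer; closet 23 is released
print(switch.patches)
```

Because a patch is a one-to-one connection, pointing the analyzer at a new closet implicitly disconnects it from the old one. No filtering or copying happens at this layer.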

Tap Aggregators

There are several companies selling tap aggregators. These products allow you to distribute data from several data sources (taps, mirror ports) into several analyzer tools. Most of them allow you to filter traffic coming from sources or flowing to tools, snap payloads off of packets, etc...

Use cases include:

- Feed HTTP traffic from all sources to the web server customer experience monitor.

- Feed voice bearer traffic (without payloads for compliance purposes) to an IP telephony quality scoring system.

- Feed SMTP traffic from all ports only to the confidential data loss analyzer system.

In addition to those strategic uses, you might have a couple of tactical diagnostic tools plugged into the aggregator. When problems arise, you configure the aggregator to feed the interesting traffic to the analysis tool.
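The strategic use cases above boil down to a rule table: match some traffic, optionally trim it, and deliver it to a tool. The sketch below models that idea in a few lines. Everything in it is illustrative: the tool names, the `Packet` fields, and especially the UDP port range used to stand in for voice bearer traffic are assumptions, and real products have their own filter syntax.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Packet:
    proto: str        # "tcp" or "udp"
    dst_port: int
    payload: bytes

@dataclass
class Rule:
    tool: str
    match: Callable[[Packet], bool]
    snap_payload_to: Optional[int] = None   # None keeps the full payload

# Hypothetical rule set mirroring the use cases above.
RULES = [
    Rule("web-experience-monitor",
         lambda p: p.proto == "tcp" and p.dst_port == 80),
    # Assumed voice bearer port range; payloads are stripped for compliance.
    Rule("voice-quality-scorer",
         lambda p: p.proto == "udp" and 16384 <= p.dst_port <= 32767,
         snap_payload_to=0),
    Rule("dlp-analyzer",
         lambda p: p.proto == "tcp" and p.dst_port == 25),
]

def distribute(packet, rules=RULES):
    """Return (tool, packet-as-delivered) for every rule the packet matches."""
    deliveries = []
    for rule in rules:
        if rule.match(packet):
            payload = packet.payload
            if rule.snap_payload_to is not None:
                payload = payload[:rule.snap_payload_to]
            deliveries.append((rule.tool, Packet(packet.proto, packet.dst_port, payload)))
    return deliveries

print(distribute(Packet("udp", 20000, b"audio samples")))
print(distribute(Packet("tcp", 25, b"MAIL FROM:<a@example.com>")))
```

Pointing a tactical diagnostic tool at a problem is then just a matter of adding one more rule for the duration of the investigation.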

Change Control

I've successfully made the case that everything on the "copy" side of Ethernet taps should fall outside the scope of change control policies in a couple of organizations. This has allowed me to perform code upgrades on really smart taps, reconfigure L1 and aggregation switches, etc... during business hours.

It's critical that these tools be available for tactical use during business hours, but most large enterprises won't take the perceived risk of allowing this type of work unless you plan ahead. Start planting the seeds for mid-day tactical work during network planning stages. Get exceptions to change control coded into "run books", "ops manuals", "architectural documents" or whatever documentation makes your organization tick.